How to Optimize Your .NET Code for Better Performance in Software Development

To optimize .NET code for better performance, developers can follow several best practices: measure before changing anything, minimize memory allocations to reduce the cost of allocating and freeing memory, optimize database queries, cache data, use asynchronous programming in ASP.NET applications, and choose an appropriate string concatenation method. Profiling tools help identify the bottlenecks in the code that are actually worth optimizing.
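Measuring can start with something very small. The following is a minimal sketch of a quick measurement helper, assuming a hypothetical `QuickMeasure` class (the name and the iteration count are illustrative and not from any library). It uses only `Stopwatch` and `GC.GetAllocatedBytesForCurrentThread()` from the base class library; for reliable numbers, a benchmarking harness such as BenchmarkDotNet, which is used later in this article, is the better choice.

```csharp
using System;
using System.Diagnostics;

// Hypothetical helper for rough, ad hoc measurements.
public static class QuickMeasure
{
    // Runs the action repeatedly and reports the elapsed time and the number
    // of bytes allocated on the current thread during the loop.
    public static (TimeSpan Elapsed, long AllocatedBytes) Run(Action action, int iterations = 1_000)
    {
        action(); // one warm-up call, so the first JIT compilation is not measured

        long allocatedBefore = GC.GetAllocatedBytesForCurrentThread();
        var stopwatch = Stopwatch.StartNew();

        for (int i = 0; i < iterations; i++)
        {
            action();
        }

        stopwatch.Stop();
        long allocatedAfter = GC.GetAllocatedBytesForCurrentThread();

        return (stopwatch.Elapsed, allocatedAfter - allocatedBefore);
    }
}
```

A call such as `QuickMeasure.Run(() => string.Join(",", "a", "b", "c"))` returns both values as a tuple. A single warm-up call is a simplification, so the result should be treated as a rough indication only.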

Use the latest .NET version

It's important to keep up with the changes and updates in the .NET platform as the development team is constantly delivering new features that can improve performance. For instance, the release of C# 11/.NET 7 included numerous improvements resulting in performance enhancements such as on-stack replacement, more efficient regexes, and enhancements to LINQ. So, staying up-to-date with the latest features can positively impact the performance of your code. [Performance Improvements in .NET 7 - .NET Blog (microsoft.com)]

Use async where possible

One of the most significant factors that can impact performance is the use of asynchronous methods. To understand their performance implications, it's important to first understand the difference between synchronous and asynchronous methods. Synchronous methods execute one at a time, in the order they are called. If a method is waiting for an I/O operation to complete, the calling thread is blocked and cannot do anything else until the operation finishes. This can lead to slow performance, especially if there are a lot of I/O operations involved.

Asynchronous methods, on the other hand, do not block the calling thread while an I/O operation is in progress. When the method reaches an await on an operation that has not completed yet, it returns control to its caller, and the thread is free to do other work, such as serving another request. No additional thread is needed for the wait itself. Once the operation completes, the rest of the method resumes, in ASP.NET Core typically on a thread-pool thread.

Not using asynchronous methods in an ASP.NET application can have a number of performance implications. Here are a few:

- Decreased Throughput: A thread that executes a synchronous method serves only one request at a time and stays blocked during I/O. When many requests wait on I/O, the thread pool can run out of available threads and new requests have to queue.

- Increased Resource Usage: When a thread is blocked while waiting for an I/O operation to complete, it is still consuming resources. This can lead to increased resource usage and slower performance.

To optimize the performance of the application, it is crucial to use asynchronous methods in suitable scenarios. This involves selecting the async version of a method and arranging the code so that it benefits from asynchronous execution. When operations are independent of each other, one approach is to avoid awaiting them one after another:

    var data1 = await GetData1Async(ct);
    var data2 = await GetData2Async(ct);

and to use the Task.WhenAll(...) method instead, so that all tasks run concurrently:

    var data = await Task.WhenAll(
        GetData1Async(ct),
        GetData2Async(ct)
    );
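The effect of the two arrangements above can be made visible with a small timing experiment. This is a minimal sketch, assuming a hypothetical `GetDataAsync` method that simulates I/O latency with `Task.Delay`; the class name, the 200 ms delay, and the return values are illustrative only.

```csharp
using System;
using System.Diagnostics;
using System.Threading;
using System.Threading.Tasks;

public static class ConcurrentAwaitDemo
{
    // Hypothetical stand-in for an I/O-bound call such as an HTTP request.
    private static async Task<int> GetDataAsync(int value, CancellationToken ct)
    {
        await Task.Delay(200, ct); // simulated latency, not real I/O
        return value;
    }

    // Awaits each operation before starting the next one.
    public static async Task<(int Sum, TimeSpan Elapsed)> SequentialAsync(CancellationToken ct = default)
    {
        var stopwatch = Stopwatch.StartNew();
        int data1 = await GetDataAsync(1, ct);
        int data2 = await GetDataAsync(2, ct);
        return (data1 + data2, stopwatch.Elapsed);
    }

    // Starts both operations first and then awaits them together.
    public static async Task<(int Sum, TimeSpan Elapsed)> ConcurrentAsync(CancellationToken ct = default)
    {
        var stopwatch = Stopwatch.StartNew();
        int[] data = await Task.WhenAll(GetDataAsync(1, ct), GetDataAsync(2, ct));
        return (data[0] + data[1], stopwatch.Elapsed);
    }
}
```

With the simulated delay, the sequential version should take roughly twice as long as the concurrent one. This only applies to operations that are independent of each other; for example, a single EF Core DbContext instance does not support several operations running at the same time.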

Optimize data access

Most applications manipulate data and rely on some form of data storage, such as a SQL database or MongoDB. With relational databases, an Object-Relational Mapper (ORM) such as EF Core commonly serves as the data access layer. To achieve optimal performance, it is important to follow best practices when working with it.

Avoid lazy-loading

Lazy loading is a technique used to delay the loading of related data until it is required. This can improve performance by avoiding queries for related data that is never used. However, lazy loading can also lead to the N+1 problem: one query loads N objects, and then an additional query is executed for each object whose related data is accessed. This can result in a large number of database queries and significantly slow down the application. To avoid the N+1 problem, eager loading can be used to load all required data in a single query, or batch loading can be used to load the related data for multiple objects at once.
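The difference in the number of queries can be illustrated without a real database. The following is a minimal sketch, assuming a hypothetical in-memory `FakeBlogDb` class that only counts round trips. It is not EF Core code; all type names, method names, and data are made up for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical in-memory stand-in for a database that counts round trips.
public sealed class FakeBlogDb
{
    private readonly Dictionary<int, string[]> commentsByPostId = new()
    {
        [1] = new[] { "First!", "Nice post" },
        [2] = new[] { "Thanks" },
        [3] = Array.Empty<string>(),
    };

    public int QueryCount { get; private set; }

    public List<int> GetPostIds()
    {
        QueryCount++;
        return commentsByPostId.Keys.ToList();
    }

    // One round trip per post, similar to lazily loading a navigation property.
    public string[] GetCommentsForPost(int postId)
    {
        QueryCount++;
        return commentsByPostId[postId];
    }

    // One round trip for all requested posts (batch loading).
    public Dictionary<int, string[]> GetCommentsForPosts(IReadOnlyCollection<int> postIds)
    {
        QueryCount++;
        return postIds.ToDictionary(id => id, id => commentsByPostId[id]);
    }
}

public static class NPlusOneDemo
{
    // 1 query for the posts plus 1 query per post.
    public static int CountQueriesPerPost()
    {
        var db = new FakeBlogDb();
        foreach (int postId in db.GetPostIds())
        {
            _ = db.GetCommentsForPost(postId);
        }
        return db.QueryCount;
    }

    // 1 query for the posts plus 1 query for all comments.
    public static int CountQueriesBatched()
    {
        var db = new FakeBlogDb();
        List<int> postIds = db.GetPostIds();
        _ = db.GetCommentsForPosts(postIds);
        return db.QueryCount;
    }
}
```

With the three sample posts, the per-post variant issues four queries and the batched variant two. The gap grows linearly with the number of posts, which is why the N+1 pattern becomes expensive on larger result sets.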

Use projection

Projection is a technique used in ORM to read data from a database in a more efficient and selective manner. Instead of fetching the entire object graph, which can include many unnecessary fields, projection allows only the required fields to be retrieved from the database.

Using projection can improve application performance by reducing the amount of data transferred between the database and the application, resulting in faster query execution times. It can also reduce memory usage and improve scalability, as less data needs to be stored in memory.

    // Without projection, every column of every post is loaded:
    var posts = context.Posts.ToList();

    // Use projection to retrieve only the required fields:
    var postTitles = context.Posts.Select(p => p.Title).ToList();

Avoid Cartesian Explosion

The Cartesian explosion problem is a performance issue that can occur when multiple tables with a large number of records are joined, resulting in a large number of rows being returned. This can cause the application to slow down or even crash due to memory and performance issues.

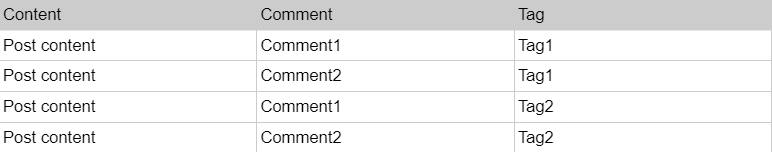

As an example, consider the data structure of a blog post.

When eagerly loading the Tags and Comments of the BlogPost entity, the resulting query will contain multiple joins, resembling the following:

    SELECT [blogPost].[Content], [comment].[Content] AS [Comment], [tag].[Value] AS [Tag]
    FROM [dbo].[BlogPost] AS [blogPost]
    JOIN [dbo].[Comment] AS [comment] ON [comment].[BlogPostId] = [blogPost].[Id]
    JOIN [dbo].[Tag] AS [tag] ON [tag].[BlogPostId] = [blogPost].[Id]

This returns one row for every combination of comment and tag per post. A post with 10 comments and 5 tags, for example, produces 50 rows, each of which repeats the post content.

To minimize the time and resources used, it is advisable to load the related collections with separate SELECT queries, or to use the equivalent mechanism built into the ORM, such as split queries in EF Core 5 and later. This avoids transferring duplicated data over the network and allocating it in application memory.

    // One query for the posts, plus one query per included collection:
    var posts = context.Posts
        .Include(x => x.Comments)
        .Include(x => x.Tags)
        .AsSplitQuery()
        .ToList();

Skip tracking entities if not necessary

Tracking entities means that the ORM keeps track of any changes made to the retrieved entities and persists them to the database upon calling the SaveChanges() method. However, in scenarios where we only need to retrieve data without modifying it, tracking entities can be resource-intensive and slow down the performance of our application.

To avoid tracking entities, we can use methods such as AsNoTracking() or configure the context to not track entities by default. This approach can improve the performance of our application by reducing the overhead of tracking changes to the retrieved entities that we don't intend to modify.

    // Requires: using Microsoft.EntityFrameworkCore;

    // Using AsNoTracking():
    var nonTrackedPosts = context.Posts.AsNoTracking().ToList();

    // Configuring the context to not track entities by default:
    public class MyDbContext : DbContext
    {
        public MyDbContext(DbContextOptions<MyDbContext> options) : base(options) { }

        protected override void OnConfiguring(DbContextOptionsBuilder optionsBuilder)
        {
            optionsBuilder.UseQueryTrackingBehavior(QueryTrackingBehavior.NoTracking);
        }

        public DbSet<Post> Posts { get; set; }
    }

Use sealed types

Sealed types in .NET are classes that cannot be inherited from (structs are always implicitly sealed). When a class is sealed, the JIT compiler knows that no derived type can exist, which allows optimizations that are not possible with non-sealed classes.

Using sealed classes can improve performance because the JIT compiler knows the exact type it is working with. For example, when a virtual method is called on a sealed type, the JIT can replace the virtual call with a direct call (devirtualization) and possibly inline it, which reduces the number of instructions that need to be executed. Type checks and casts against a sealed type can also be cheaper, because an exact type comparison is enough.

This can be observed in a basic benchmark:

    using BenchmarkDotNet.Attributes;

    public class SealedClassBenchmark
    {
        Sealed @sealed = new Sealed();
        NonSealed nonSealed = new NonSealed();

        [Benchmark]
        public int SealedClass() => @sealed.DoWork() + 4;

        [Benchmark]
        public int NonSealedClass() => nonSealed.DoWork() + 4;

        private class BaseClass
        {
            public int DoWork() => GetNumber();

            protected virtual int GetNumber() => 2;
        }

        private sealed class Sealed : BaseClass
        {
            protected override int GetNumber() => 1;
        }

        private class NonSealed : BaseClass
        {
            protected override int GetNumber() => 3;
        }
    }

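The results below show a clear advantage for the sealed class when calling virtual methods: a mean of about 0.07 ns compared with about 1.27 ns for the non-sealed class. A time this close to zero suggests that the JIT was able to devirtualize and inline the call.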

    BenchmarkDotNet=v0.13.5, OS=Windows 11 (10.0.22621.1555/22H2/2022Update/SunValley2)
    11th Gen Intel Core i7-11800H 2.30GHz, 1 CPU, 16 logical and 8 physical cores
    .NET SDK=7.0.202
      [Host]     : .NET 7.0.4 (7.0.423.11508), X64 RyuJIT AVX2
      DefaultJob : .NET 7.0.4 (7.0.423.11508), X64 RyuJIT AVX2

| Method         |      Mean |     Error |    StdDev |    Median |
|--------------- |----------:|----------:|----------:|----------:|
| SealedClass    | 0.0702 ns | 0.0259 ns | 0.0673 ns | 0.0480 ns |
| NonSealedClass | 1.2676 ns | 0.0477 ns | 0.1187 ns | 1.2369 ns |

Avoid Exceptions

Exceptions can be computationally expensive and negatively impact the performance of an application. When an exception is thrown, the program must stop its normal flow and begin to execute the exception handling code. This process can slow down the program's execution and impact its overall performance.

Additionally, handling exceptions can also be resource-intensive, requiring additional memory and processing power. Therefore, it's important to avoid throwing unnecessary exceptions and instead use other programming techniques, such as conditional statements or error codes, to handle errors and exceptions in a more efficient and performant manner.

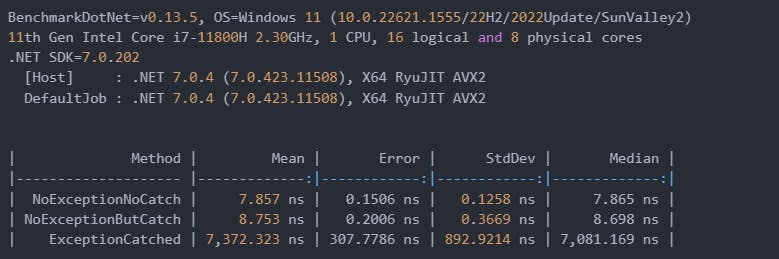

The following benchmark evaluates the impact of exception handling code on the execution time:

    using System;
    using BenchmarkDotNet.Attributes;

    public class ExceptionBenchmark
    {
        public Random Random = new();

        // Baseline: no try-catch block and no exception.
        [Benchmark]
        public bool NoExceptionNoCatch()
        {
            return DoWorkNoException();
        }

        // A try-catch block is present, but nothing is thrown.
        [Benchmark]
        public bool NoExceptionButCatch()
        {
            try
            {
                return DoWorkNoException();
            }
            catch (Exception)
            {
                return false;
            }
        }

        // An exception is thrown and caught on every call.
        [Benchmark]
        public bool ExceptionCatched()
        {
            try
            {
                return DoWorkThrowException();
            }
            catch (Exception)
            {
                return false;
            }
        }

        private bool DoWorkNoException() => DoSomething() > 10;

        private bool DoWorkThrowException()
        {
            DoSomething();
            throw new Exception();
        }

        private int DoSomething() => Random.Next(20);
    }

The results indicate that including a try-catch block has minimal impact on the execution time while throwing an exception can significantly decrease performance.
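One common way to handle expected failures without exceptions is the Try pattern used throughout the .NET base class library. The sketch below is an illustration and not part of the benchmark above; the `QuantityParser` class and its method names are hypothetical. Both methods return the fallback for malformed input, but only the first one pays for a throw and a catch when that happens.

```csharp
using System;
using System.Globalization;

public static class QuantityParser
{
    // Exception-based variant: malformed input costs a throw and a catch.
    public static int ParseOrDefaultWithCatch(string input, int fallback)
    {
        try
        {
            return int.Parse(input, NumberStyles.Integer, CultureInfo.InvariantCulture);
        }
        catch (FormatException)
        {
            return fallback;
        }
        catch (OverflowException)
        {
            return fallback;
        }
    }

    // Try-pattern variant: failure is reported through the return value.
    public static int ParseOrDefault(string input, int fallback) =>
        int.TryParse(input, NumberStyles.Integer, CultureInfo.InvariantCulture, out int value)
            ? value
            : fallback;
}
```

Exceptions remain appropriate for truly unexpected situations. The Try pattern fits cases where failure is a normal outcome, such as validating user input.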

Caching

Caching can be a valuable tool to enhance an application's performance and scalability by reducing the workload required to generate content. It is most effective for data that changes infrequently and requires significant effort to generate. Caching creates a copy of the data, which can be retrieved much more quickly than from the original source. However, applications should be designed and tested to not rely solely on cached data.

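ASP.NET Core offers several caching options, with the most straightforward one being IMemoryCache:

    public DateTime GetTime()
    {
        if (!memoryCache.TryGetValue(CacheKeys.Entry, out DateTime cacheValue))
        {
            cacheValue = DateTime.Now;
            // The original snippet is cut off here; the 30-second expiration is an assumed value.
            memoryCache.Set(CacheKeys.Entry, cacheValue, TimeSpan.FromSeconds(30));
        }
        return cacheValue;
    }

This cache stores data in the server's memory. For applications running on multiple servers, sticky sessions should therefore be used to ensure that requests from a client are consistently directed to the same server. Where sticky sessions are not an option, a distributed cache avoids inconsistencies between servers.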

String Concatenation

A lot has been said about using the StringBuilder class for manipulating strings, but is it always the optimal choice? .NET offers several ways to concatenate strings, and it is important to pick the right one for the specific scenario.

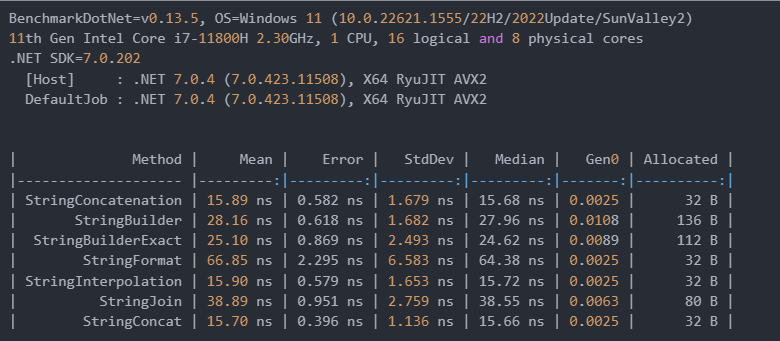

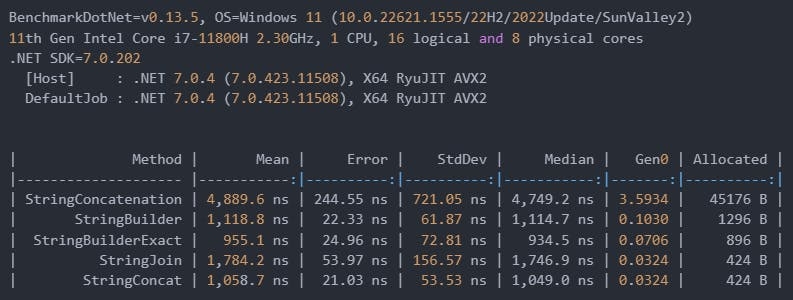

Performance benchmarks were run on several approaches in two scenarios: concatenating a small number of strings (3 values) and concatenating a string array with 200 entries. The benchmark for 3 values looks as follows:

    using System;
    using System.Text;
    using BenchmarkDotNet.Attributes;

    [MemoryDiagnoser]
    public class StringConcatenationBenchmark
    {
        private readonly string str1;
        private readonly string str2;
        private readonly string str3;

        public StringConcatenationBenchmark()
        {
            // Each value is a single digit, so the result is always 3 characters long.
            var random = new Random();
            str1 = random.Next(10).ToString();
            str2 = random.Next(10).ToString();
            str3 = random.Next(10).ToString();
        }

        [Benchmark]
        public string StringConcatenation() => str1 + str2 + str3;

        [Benchmark]
        public string StringBuilder() =>
            new StringBuilder(str1).Append(str2).Append(str3).ToString();

        // StringBuilder with the exact capacity of the result (3 characters).
        [Benchmark]
        public string StringBuilderExact() =>
            new StringBuilder(3).Append(str1).Append(str2).Append(str3).ToString();

        [Benchmark]
        public string StringFormat() => string.Format("{0}{1}{2}", str1, str2, str3);

        [Benchmark]
        public string StringInterpolation() => $"{str1}{str2}{str3}";

        [Benchmark]
        public string StringJoin() => string.Join(string.Empty, str1, str2, str3);

        [Benchmark]
        public string StringConcat() => string.Concat(str1, str2, str3);
    }

According to the findings, concatenating 3 strings using the '+' operator is just as efficient as using string interpolation or the String.Concat method. On the other hand, StringBuilder incurs additional overhead in terms of time and memory consumption.

In scenarios where a larger number of strings is involved, such as the benchmark with 200 strings, repeated concatenation with the '+' operator consumes significantly more processor time and memory than the other methods, because every step creates a new string and copies the content accumulated so far.
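The code for the 200-string scenario is not shown in this article, so the following is only a sketch of what such variants might look like. The `ManyStringsDemo` class and its method names are hypothetical, and the methods are not the benchmarked ones. All three produce the same result and differ only in how many intermediate strings they allocate.

```csharp
using System;
using System.Text;

public static class ManyStringsDemo
{
    // Each += allocates a new string and copies everything accumulated so far.
    public static string WithPlusOperator(string[] values)
    {
        string result = string.Empty;
        foreach (string value in values)
        {
            result += value;
        }
        return result;
    }

    // Appends into a growable buffer and creates the final string once.
    public static string WithStringBuilder(string[] values)
    {
        var builder = new StringBuilder();
        foreach (string value in values)
        {
            builder.Append(value);
        }
        return builder.ToString();
    }

    // Lets the runtime build the result from the whole array in one call.
    public static string WithConcat(string[] values) => string.Concat(values);
}
```

When the values are already available as an array or collection, `string.Concat` or `string.Join` is usually the simplest option, while StringBuilder fits cases where the content is produced step by step.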

Conclusion

In conclusion, optimizing the performance of .NET code is an essential aspect of software development. By following the performance optimizations mentioned in this article, developers can significantly improve the speed, scalability, and responsiveness of their .NET applications.
